Functional Calculus

Before continuing, we need a basic understanding of the operations of functional calculus. Functional calculus extends the ideas of multivariable calculus to problems in the framework of the calculus of variations. My primary interest in functional calculus comes from the study of spatial ecology and other topics in mathematical biology, but it is also a powerful tool of modern physics [Donoghue'96], and these examples may be more familiar. A rigorous theory of functional calculus is a challenging topic, and I am not qualified to provide formal justification for the basic rules. But the basics aren't too hard to grasp, so let's explore.

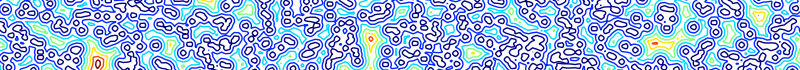

The basic idea is to extend the concept of a gradient from vectors indexed over a finite set to functions indexed over a continuum using Dirac delta-functions \(\delta(x)\) and their derivatives. Let \(x\) correspond to a position on a line and \(\theta(x)\) be a function defined on that line. The operator \[ \frac{\delta W[\theta]}{\delta\theta} \] is the functional calculus equivalent of a Jacobian. We have replaced the standard Leibniz notation of \(d\) with \(\delta\) to emphasize the difference between classical and functional derivatives; sometimes \(\mathcal{D}\) is used instead. The functional derivative of \(W[\theta]:=\theta(x)\) is \begin{gather} \label{eq:ddef} \frac{ \delta \theta(x)}{ \delta \theta(y)} = \delta( x - y). \end{gather} The dummy index \(y\) represents the component of \(\theta\) we are differentiating with respect to. In this case, the functional derivative is a delta-function peaked where \(x\) coincides with \(y\) and zero otherwise.

In many cases, differentiation will be applied to an integral. For instance, \begin{gather} \frac{ \delta }{ \delta \theta(y) } \int_0^1 \theta(x) dx = \int_0^1 \delta(x-y) dx = \begin{cases} 1 & \text{if \( y \in ( 0, 1)\)}, \\ \text{context-sensitive} & \text{if \( y \in \{ 0, 1\}\)}, \\ 0 & \text{otherwise}. \end{cases} \end{gather}
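One way to build intuition for the integral above is to discretize it. The following is a minimal sketch, not something from the original derivation: it assumes a uniform grid on \([0,1]\) with spacing \(h\), approximates the integral with the trapezoid rule, and approximates the functional derivative at grid point \(x_j\) by \(\frac{1}{h} \frac{\partial W}{\partial \theta_j}\). The helper names `trapezoid` and `functional_derivative` are made up for illustration.

```python
def trapezoid(theta, h):
    """Trapezoid-rule approximation of the integral of theta over the grid."""
    return h * (0.5 * theta[0] + sum(theta[1:-1]) + 0.5 * theta[-1])


def functional_derivative(W, theta, h, j, eps=1e-6):
    """Approximate dW/dtheta(x_j) by (1/h) * dW/dtheta_j (central difference)."""
    up = list(theta)
    up[j] += eps
    down = list(theta)
    down[j] -= eps
    return (W(up, h) - W(down, h)) / (2 * eps * h)


n = 10                       # number of grid cells (assumed for illustration)
h = 1.0 / n                  # grid spacing on [0, 1]
theta = [(i * h) ** 2 for i in range(n + 1)]   # any test function works here

interior = functional_derivative(trapezoid, theta, h, 5)   # y inside (0, 1)
boundary = functional_derivative(trapezoid, theta, h, 0)   # y = 0

print(interior, boundary)
```

With this quadrature rule the interior value comes out close to 1 and the boundary value close to 1/2. A different rule would assign a different weight to the endpoints, which is one way to read the "context-sensitive" case.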

Most of the standard rules of calculus hold for functional differentiation. The derivative of a function \(f(x)\) that does not depend on \(\theta\) is zero, \begin{gather} \frac{\delta f(x)}{\delta \theta(y)} = 0. \end{gather} The product rule holds, so for example \begin{gather} \frac{ \delta \left[ \theta(x) \theta(x - 2) \right] }{ \delta \theta(y) } = \theta(x-2) \delta(x - y) + \theta(x) \delta( x - 2 - y) \end{gather} and when we extend the product rule to powers, \begin{gather} \frac{ \delta \theta^n(x) }{ \delta \theta(y) } = n \theta^{n-1}(x) \delta(x - y). \end{gather} The chain rule implies \begin{gather} \frac{ \delta \left[ f(\theta(x),x) \right] }{ \delta \theta(y) } = \frac{ \partial f(\theta(x),x)}{\partial \theta} \; \delta(x-y). \end{gather}
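The product-rule example can be checked numerically. Integrating it over \(x\) predicts that the functional derivative of \(\int \theta(x)\theta(x-2)\,dx\) with respect to \(\theta(y)\) is \(\theta(y-2) + \theta(y+2)\). The sketch below tests this under assumptions that are mine rather than the text's: a periodic domain of length 8, a uniform grid, and an arbitrary smooth test function.

```python
import math


def W(theta, h, shift):
    """Riemann sum for the integral of theta(x) * theta(x - shift*h), periodic grid."""
    n = len(theta)
    return h * sum(theta[i] * theta[(i - shift) % n] for i in range(n))


def functional_derivative(theta, h, shift, j, eps=1e-6):
    """Approximate dW/dtheta(x_j) by (1/h) * dW/dtheta_j (central difference)."""
    up = list(theta)
    up[j] += eps
    down = list(theta)
    down[j] -= eps
    return (W(up, h, shift) - W(down, h, shift)) / (2 * eps * h)


length = 8.0                 # periodic domain length (assumed)
n = 80                       # number of grid points (assumed)
h = length / n
shift = round(2.0 / h)       # the offset of 2 measured in grid cells

theta = [
    math.sin(2 * math.pi * i * h / length) + 0.3 * math.cos(4 * math.pi * i * h / length)
    for i in range(n)
]

j = 13                       # grid index playing the role of y
numeric = functional_derivative(theta, h, shift, j)
expected = theta[(j - shift) % n] + theta[(j + shift) % n]   # theta(y-2) + theta(y+2)

print(numeric, expected)
```

Because this functional is quadratic in \(\theta\), the central difference is exact up to rounding, so the two printed values should agree to many digits.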

In addition to the standard rules of differentiation, the continuity of the index \(x\) introduces some properties that are particular to functional calculus. For instance, using integration-by-parts and assuming the boundary terms vanish, we can show that \begin{multline} \frac{ \delta }{ \delta \theta(y) } \int f(\theta'(x)) dx = \int \frac{\partial f}{\partial \theta'} \frac{\delta \theta'(x)}{\delta \theta(y)} dx \\ = \int \frac{\partial f}{\partial \theta'} \delta'( x - y ) dx = \left. - \frac{d}{dx}\left[ \frac{\partial f}{\partial \theta'} \right] \right|_{x = y}. \end{multline} Rules for functions of higher-order derivatives follow by similar methods. We've abused notation in a way that conveniently lets us exchange the order of some operations, which is something you always have to be careful about, but we fall back on the rule-of-thumb to "shoot first, ask questions later".

The rules of functional differentiation are applied to determine critical points of functionals, which may be local minima, local maxima, or saddles. Given a functional \begin{gather} W[ \theta ] = \int f( \theta(x), \theta'(x), x ) dx, \end{gather} the critical points satisfy \begin{gather} \frac{ \delta W[\theta] }{ \delta \theta(y) } = 0 \end{gather} for all \(y\). Evaluating the functional derivative, \begin{multline} \frac{ \delta }{ \delta \theta(y) } \int f( \theta(x), \theta'(x), x ) dx = \int \left[ \frac{\partial f}{\partial \theta} \frac{\delta \theta(x)}{\delta \theta(y)} + \frac{\partial f}{\partial \theta'} \frac{\delta \theta'(x)}{\delta \theta(y)} \right] dx \\ = \left. \frac{\partial f}{\partial \theta} \right|_{x = y} - \left. \frac{d}{dx}\left[ \frac{\partial f}{\partial \theta'} \right] \right|_{x = y}. \end{multline} Setting this expression to zero gives the Euler-Lagrange equation from calculus of variations.
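As a sanity check on the Euler-Lagrange expression, here is a small numerical sketch. The integrand \(f = \tfrac{1}{2}\theta'^2 + \tfrac{1}{2}\theta^2\), the test function \(\theta = \sin x\), and the grid are all choices made for illustration, not taken from the text. For this \(f\), the expression above reduces to \(\theta - \theta''\), which equals \(2 \sin x\) for the chosen test function.

```python
import math


def W(theta, h):
    """Discretized W = integral of [ theta'^2 / 2 + theta^2 / 2 ] dx."""
    slopes = sum(((theta[i + 1] - theta[i]) / h) ** 2 for i in range(len(theta) - 1))
    values = sum(t ** 2 for t in theta)
    return 0.5 * h * (slopes + values)


def functional_derivative(theta, h, j, eps=1e-4):
    """Approximate dW/dtheta(x_j) by (1/h) * dW/dtheta_j (central difference)."""
    up = list(theta)
    up[j] += eps
    down = list(theta)
    down[j] -= eps
    return (W(up, h) - W(down, h)) / (2 * eps * h)


n = 200                      # number of grid cells on [0, pi] (assumed)
h = math.pi / n
theta = [math.sin(i * h) for i in range(n + 1)]

j = 60                       # an interior grid index playing the role of y
numeric = functional_derivative(theta, h, j)
analytic = 2.0 * math.sin(j * h)   # theta - theta'' evaluated at x_j

print(numeric, analytic)
```

The two values should agree up to the \(O(h^2)\) error of the finite-difference second derivative. Only interior points are compared, since the boundary terms were dropped in the derivation.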

We can now derive the wave equation from the principle of least action. The action of a vibrating string is the total kinetic energy minus the total potential energy, or \begin{equation} A[\phi] = \iint \left[ \frac{m}{2} \left( \frac{\partial \phi}{\partial t} \right)^2 - \left( k \frac{\partial \phi}{\partial x} \right)^2 \right] dx dt. \end{equation} Note that the derivatives inside the integral are ordinary partial derivatives of \(\phi(x,t)\), not functional derivatives. The principle asks for a stationary point of the action, so we differentiate and set the derivative equal to zero. Using functional differentiation, we can show that \begin{equation} \frac{\delta A[\phi]}{\delta \phi} = - m \frac{\partial^2 \phi}{\partial t^2} + 2 k^2 \frac{\partial^2 \phi}{\partial x^2} = 0. \end{equation} We thus have the standard second-order wave equation for further study.
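The intermediate steps can be written out as follows. This is a sketch that assumes the Euler-Lagrange rule extends in the usual way to two independent variables, that boundary terms vanish, and that the subscripts \(\phi_t\) and \(\phi_x\) (shorthand introduced here, not used elsewhere in the text) denote partial derivatives.

```latex
\begin{align}
f(\phi_t, \phi_x) &= \frac{m}{2} \phi_t^2 - k^2 \phi_x^2, \\
\frac{\delta A[\phi]}{\delta \phi}
  &= \frac{\partial f}{\partial \phi}
   - \frac{\partial}{\partial t}\left[ \frac{\partial f}{\partial \phi_t} \right]
   - \frac{\partial}{\partial x}\left[ \frac{\partial f}{\partial \phi_x} \right] \\
  &= 0 - \frac{\partial}{\partial t}\left[ m \phi_t \right]
       - \frac{\partial}{\partial x}\left[ -2 k^2 \phi_x \right] \\
  &= - m \phi_{tt} + 2 k^2 \phi_{xx}.
\end{align}
```

Setting the last line to zero gives \(\phi_{tt} = c^2 \phi_{xx}\) with \(c^2 = 2k^2/m\), so with the potential energy written as \((k \phi_x)^2\) the wave speed is \(k\sqrt{2/m}\).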

Functional differentiation can also be used to develop series approximations of nonlinear functionals like the generalized Taylor series \begin{multline} W[ \theta(x) + \epsilon(x) ] = W [ \theta(x) ] + \int \frac{ \delta W[\theta(x)] }{ \delta \theta(x_1) } \epsilon(x_1) dx_1 \\ + \frac{1}{2} \iint \frac{ \delta^2 W[\theta(x)] }{ \delta \theta(x_1) \delta \theta(x_2) } \epsilon(x_1) \epsilon(x_2) dx_1 dx_2 + \ldots . \end{multline}
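To see the series at work, consider the functional \(W[\theta] = \int_0^1 \theta^3(x)\,dx\), chosen here purely for illustration. The rules above give a first derivative of \(3\theta^2(x_1)\) and a second derivative of \(6\theta(x_1)\,\delta(x_1 - x_2)\), so the delta-function collapses the double integral to a single one. The sketch below compares successive truncations on a midpoint grid; the test function and perturbation are arbitrary assumptions.

```python
import math

n = 1000                                     # number of grid cells (assumed)
h = 1.0 / n
xs = [(i + 0.5) * h for i in range(n)]       # midpoint grid on [0, 1]

theta = [1.0 + x for x in xs]                # base function (arbitrary choice)
eps = [0.01 * math.sin(math.pi * x) for x in xs]   # small perturbation


def integrate(values):
    """Midpoint-rule approximation of an integral over [0, 1]."""
    return h * sum(values)


# W[theta + eps], evaluated directly
exact = integrate([(t + e) ** 3 for t, e in zip(theta, eps)])

# Successive truncations of the functional Taylor series
order0 = integrate([t ** 3 for t in theta])
order1 = order0 + integrate([3 * t ** 2 * e for t, e in zip(theta, eps)])
order2 = order1 + 0.5 * integrate([6 * t * e * e for t, e in zip(theta, eps)])

errors = [abs(exact - approx) for approx in (order0, order1, order2)]
print(errors)
```

Each additional term should shrink the error by roughly a factor of the perturbation size. For this cubic functional, the remainder after the second-order term is exactly \(\int \epsilon^3\,dx\).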