Risk Resonance

Resonance is a compelling component of the study of mechanical systems, as it helps us identify "special situations" where a monotonic perspective on parameter dependence fails, perhaps unexpectedly.

Despite its clear importance in mechanics, there is little discussion of resonance in probabilistic systems, as far as I can tell. This seems like something worth correcting.

First, we won't be able to find a perfect parallel -- mechanical resonances depend on forcing, momentum, rotational effects, and superposition, which we won't find in simple probabilistic models. But if we widen our view a little, we can still make some progress. Classical resonance appears because a system has a natural frequency that interacts with a forcing frequency. When the two frequencies are far out of alignment, oscillation amplitudes respond monotonely; above the natural frequency, for example, a faster forcing frequency leads to a smaller amplitude response. But in the range where the frequencies coincide, we see a peak in the amplitude of oscillations, which we call resonance.

One analog for this in a first-order random system is how the probability of an outcome responds as the risks of auxiliary pathways are varied with respect to some natural background pathways. The simplest such example I can think of is a 4-state continuous-time Markov chain with two possible outcomes. Here's a diagram.

We start at state 1, and ask, "What's the probability of ending up in state 4, rather than state 3?" A simple calculation shows the probability of ending up in 4 is \[\frac{cd}{(a+c)(b+d)}.\] This probability responds monotonely to each of the parameters. Increasing rate \(c\) or \(d\) increases the chance of reaching state \(4\). Increasing rate \(a\) or \(b\) decreases the chance of reaching state \(4\). This behavior is actually very general -- with a simple application of Cramer's rule for solving linear systems, we can show that the probability of any outcome will respond monotonely to perturbations of any individual transition rate.

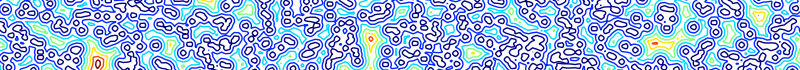
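As a quick numerical sanity check of the monotone behavior described above, here is a minimal sketch that evaluates the closed form \(\frac{cd}{(a+c)(b+d)}\) while sweeping one rate at a time. The baseline rates and the sweep grid are arbitrary values chosen for illustration, and the helper names `p4` and `is_monotone` are hypothetical.

```python
def p4(a, b, c, d):
    """Probability of ending in state 4, using the closed form quoted in the text."""
    return (c * d) / ((a + c) * (b + d))


def is_monotone(values):
    """True if the sequence never changes direction."""
    pairs = list(zip(values, values[1:]))
    increasing = all(x <= y for x, y in pairs)
    decreasing = all(x >= y for x, y in pairs)
    return increasing or decreasing


# Arbitrary illustrative baseline rates (an assumption, not from the post).
base = dict(a=1.0, b=2.0, c=0.5, d=3.0)
grid = [0.1 * k for k in range(1, 101)]

for name in base:
    # Sweep one rate over the grid while holding the other three fixed.
    curve = [p4(**{**base, name: v}) for v in grid]
    direction = "increasing" if curve[-1] > curve[0] else "decreasing"
    print(name, direction, "monotone:", is_monotone(curve))
```

A sweep like this only checks the sampled grid; it is not a substitute for the Cramer's rule argument, which covers every individual rate in general.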

However, if the rates are related to each other, the relationship may become more complicated. For instance, suppose that \(a\) and \(d\) are always equal, so that the chance of reaching state 4 is actually \[\frac{ca}{(a+c)(b+a)}.\] Now, if we start with \(a=0\), there is no chance of getting to state 4. Increasing \(a\) a little increases the probability of reaching state \(4\). But as \(a\) gets really big, the chance of getting to state 4 goes back to zero. It turns out that we are most likely to get to state 4 if \(a = \sqrt{bc}\)! For example, if \(b=c=1\), ...
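The location of the peak can be checked numerically. The sketch below does a simple grid search over \(a\) for the tied-rate formula \(\frac{ca}{(a+c)(b+a)}\) and compares the best grid point with \(\sqrt{bc}\). The values \(b=1\), \(c=4\), the grid spacing, and the function name `p4_tied` are assumptions made only for this illustration.

```python
import math


def p4_tied(a, b, c):
    """Chance of reaching state 4 when the rates a and d are forced to be equal."""
    return (c * a) / ((a + c) * (b + a))


# Illustrative values (an assumption, not from the post).
b, c = 1.0, 4.0

# Coarse grid search over a in (0, 20]; a sketch, not a careful optimizer.
grid = [0.001 * k for k in range(1, 20001)]
best_a = max(grid, key=lambda a: p4_tied(a, b, c))

print("grid maximizer:", best_a)
print("sqrt(b * c):   ", math.sqrt(b * c))
print("peak probability:", p4_tied(best_a, b, c))
```

Setting the derivative of \(\frac{a}{a^2 + (b+c)a + bc}\) with respect to \(a\) to zero gives \(bc - a^2 = 0\), which is where the \(\sqrt{bc}\) comes from.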

This is risk resonance. It's a situation where auxiliary pathways can be tuned relative to natural pathways to maximize or minimize the chance of a particular outcome. Another example is the case where two one-directional random walks collide.

The probability of reaching the red state when we start at the upper left blue state is \[ \frac{6 a^2 b^2}{(a+b)^4} \] which is maximized when \(a=b\).
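One way to read this formula is as a binomial probability: if each of four jumps is an \(a\)-type step with probability \(a/(a+b)\), then exactly two \(a\)-steps out of four has probability \(\binom{4}{2}\frac{a^2 b^2}{(a+b)^4}\). That reading is an assumption about the chain, not something stated above. The sketch below enumerates the paths under that assumption and compares the total with the closed form; the function names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def collide_prob(a, b):
    """Closed form quoted in the text (integer rates keep the arithmetic exact)."""
    return Fraction(6 * a**2 * b**2, (a + b) ** 4)


def enumerate_prob(a, b, steps=4, target=2):
    """Sum the probability of every jump sequence with exactly `target` a-steps.

    Assumes each jump is independently an a-step with probability a / (a + b).
    """
    p = Fraction(a, a + b)
    q = 1 - p
    total = Fraction(0)
    for path in product("ab", repeat=steps):
        if path.count("a") == target:
            total += p**target * q ** (steps - target)
    return total


for a, b in [(1, 1), (1, 2), (2, 3), (5, 1)]:
    print(a, b, collide_prob(a, b), enumerate_prob(a, b))
```

Because the formula depends on the rates only through \(p = a/(a+b)\), and \(p^2(1-p)^2\) peaks at \(p = 1/2\), the maximum at \(a=b\) follows directly.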

The take-home? When two or more transitions in a Markov process depend on a common factor, outcomes may not respond monotonely to perturbations of that factor -- changes that reduce risk in one setting may increase risk in another. Identifying situations of potential risk resonance will help us prevent or manage it.