Apple vs. FBI

A few days ago, Apple posted a letter to their customers about a judge's order that they help the FBI. There are some weird things about the particulars of the case, and of course, some passionate opinions among a few. But two things about this come to my mind.

Pebbles cause avalanches

First, a lot of the discussion appears to be about the juxtaposition of two things -- the benefit the FBI's counterterrorism efforts will get from reading the data on this one iPhone, versus the potential consequences of a new decryption tool being constructed by Apple. It is tempting, in common conversation, to say that Apple is arguing to dismiss a tangible benefit in the present for the sake of some intangible hypothetical about the future, and that it's nonsense to make this kind of argument -- we can deal with Apple's intangible issues in the future when they become tangible. I have no legal expertise, but I feel like lawyers are often prone to making these kinds of arguments.

I just want to point out that this is a real trade-off, and that there are many situations in our lives and history where small events have led to large, irreversible changes in the world. We deal with this all the time in theoretical biology and mathematics, but I think it's something that's ignored in the general public conversation, and it shouldn't be.

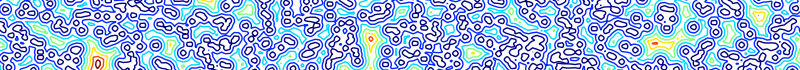

A simple and intuitive example of a small thing that becomes a big thing is an avalanche. Drop a pebble on a mountainside. That pebble knocks some other rocks loose, which hit more rocks and more rocks, until soon the whole side of the mountain is falling down. Of course, not all pebbles start avalanches. In fact, most don't. It has to be a pebble at the right place at the right time. But some pebbles do. Really, the mountainside is what controls the avalanche's potential, and the pebble is just the initiating event. But there are lots of other examples. For instance, the 2014-2015 Ebola epidemic in West Africa started with one child, but led to almost 30,000 cases. And WWI started with a single assassination.
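The pebble picture can be sketched as a toy branching process. This is only an illustration under made-up assumptions: every rolling rock has the same number of `neighbors`, and knocks each one loose independently with probability `p_knock`. The function names, the cap, and the threshold are all hypothetical choices, not a model of any real mountainside.

```python
import random

def avalanche_size(p_knock, neighbors=4, cap=2000, rng=random):
    """Total rocks dislodged by one pebble in a toy branching process.

    Assumption: each rolling rock independently knocks loose each of its
    `neighbors` with probability `p_knock`. The count is capped at `cap`
    so that runaway avalanches still terminate.
    """
    moving = 1  # rocks rolling in the current generation (starts with the pebble)
    total = 1   # rocks dislodged so far, including the pebble
    while moving > 0 and total < cap:
        newly = sum(1 for _ in range(moving * neighbors) if rng.random() < p_knock)
        total += newly
        moving = newly
    return min(total, cap)

def fraction_large(p_knock, trials=500, threshold=1000, seed=0):
    """Fraction of dropped pebbles whose avalanche reaches `threshold` rocks."""
    rng = random.Random(seed)
    big = sum(avalanche_size(p_knock, rng=rng) >= threshold for _ in range(trials))
    return big / trials

# A "stable" slope: each rock dislodges 4 * 0.2 = 0.8 others on average.
stable = fraction_large(0.2)
# A "primed" slope: each rock dislodges 4 * 0.3 = 1.2 others on average.
primed = fraction_large(0.3)
print(f"stable slope: {stable:.2%} of pebbles cause large avalanches")
print(f"primed slope: {primed:.2%} of pebbles cause large avalanches")
```

The pebble is identical in both runs. What changes is the slope: once each rock dislodges more than one other on average, a meaningful fraction of pebbles run away, while many still fizzle out.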

We can understand why these single small events lead to big changes -- a lot of people like me study these very things. In this case of Apple vs. the FBI, I think we can also understand what the consequences will be, if we take some time to look and figure out whether this is an ordinary pebble or the pebble that brings down the mountain. The important thing is to be willing to look.

Day 5 of the AI rights movement.

Here's an interesting thought experiment. Would we feel different about the FBI's request if, instead of an iPhone, it was asked of Data from Star Trek or Sonny from I, Robot? We might be a little uncomfortable with that -- a humanoid robot pleading with us. Should AI have the same rights as humans? We have been working on intelligent machines for several generations now, and some might argue we've already reached that point. Many of us carry around machines in our own pockets that, in their own way, are smarter than us. After all, who among us is smart enough to beat their phone at chess? We will probably say "That's not the same thing!", but the fact that AI has turned out different from us shouldn't be a surprise and doesn't make it any less intelligent. Perhaps what the judge is saying here is that the FBI has the right to thought-police all artificial intelligences, and that we and they have no universal right to private electronic thoughts, living or dead, like the NSA has been said to be doing for people.

Thoughts, Mr Orwell? Of course, people can go either way on this, if one does concede the legal equivalence of human and artificial intelligences. After all, the US government's use of torture has just been another way of saying that we Homo sapiens ourselves don't have an ultimate right to the privacy of our own thoughts.

Update (2016-03-13)

A few days ago, President Obama spoke at South by Southwest, and people have written commentaries like this one.

It's hard not to be sympathetic to the president's position. I want the world that he's proposing we should have. But I also think his advisors are replacing reality with magical thinking. Let me quote from his interview.

> And the question we now have to ask is, if technologically, it is possible to make an impenetrable device or system where the encryption is so strong that there’s no key, there’s no door at all, then how do we apprehend the child pornographer? How do we solve or disrupt a terrorist plot? What mechanisms do we have available to even do simple things like tax enforcement? Because, if, in fact, you can’t crack that at all, government can’t get in, then everybody is walking around with a Swiss bank account in their pocket -- right? So there has to be some concession to the need to be able to get into that information somehow.
>
> Now, what folks who are on the encryption side will argue is any key whatsoever, even if it starts off as just being directed at one device could end up being used on every device. That’s just the nature of these systems. That is a technical question. I’m not a software engineer. It is, I think, technically true, but I think it can be overstated.
>
> And so the question now becomes, we as a society -- setting aside the specific case between the FBI and Apple, setting aside the commercial interests, concerns about what could the Chinese government do with this even if we trusted the U.S. government -- setting aside all those questions, we’re going to have to make some decisions about how do we balance these respective risks.
>
> And I’ve got a bunch of smart people sitting there, talking about it, thinking about it. We have engaged the tech community aggressively to help solve this problem. My conclusion so far is that you cannot take an absolutist view on this. So if your argument is strong encryption, no matter what, and we can and should, in fact, create black boxes, then that I think does not strike the kind of balance that we have lived with for 200, 300 years. And it’s fetishizing our phones above every other value. And that can’t be the right answer.

I suppose that's exactly what the argument above would do -- fetishize the phone as an intelligent being.

If we really want to abandon the fetishizing of phones and computing devices in general as special things, then we might also want to abandon our fetishizing of human sapience, since we are being mastered by our creations. Perhaps we should also abandon our fetishes over the universal sacredness of human life, which we treat so inconsistently? After all, perhaps it's really more about what our technology wants than what we ourselves want that matters.

The president's approach focuses on the hierarchical aspects of the problem, trying to find some balance, and fails to ask whether this is a convex minimization problem, where an intermediate balance makes sense, or a concave minimization problem (or something harder), where false balances can collapse to undesirable outcomes. In this particular case, the philosophical approach acknowledges, but dismisses without explanation, the power of information asymmetry in the game of life. Might it not be more reasonable to argue that we cannot remove asymmetry -- only change its balance? The way to make this asymmetry fair for citizens might be to empower all people with it, and then design the rules of the game to force people to reveal the information we need to run a country, such as tax information.
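To make the convex versus concave distinction concrete, here is a minimal sketch with two invented cost functions on a policy dial `x` between 0 and 1. The dial, the functions, and the grid search are all hypothetical illustrations; nothing here claims to measure the real costs of any encryption policy.

```python
def argmin_on_grid(cost, steps=100):
    """Return the x in [0, 1], on an evenly spaced grid, with the lowest cost."""
    grid = [i / steps for i in range(steps + 1)]
    return min(grid, key=cost)

def convex_cost(x):
    # Hypothetical convex cost: both extremes are penalized, so the
    # cheapest policy sits in the middle.
    return x**2 + (1 - x)**2

def concave_cost(x):
    # Hypothetical concave cost: the middle is the most expensive point,
    # so the cheapest policy sits at one of the extremes.
    return x * (1 - x)

best_convex = argmin_on_grid(convex_cost)
best_concave = argmin_on_grid(concave_cost)
print("convex problem, best x:", best_convex)
print("concave problem, best x:", best_concave)
print("cost of the 'balanced' x = 0.5 in the concave problem:", concave_cost(0.5))
```

In the convex case the compromise at `x = 0.5` is the best available policy. In the concave case the same compromise is the worst point on the dial, which is the sense in which a false balance can be worse than either extreme.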