Fiber-bundles for root-polishing in two dimensions

In some of the theoretical biology modeling I do, we try to keep problems low-dimensional. A useful model is one that we can understand intuitively, and high-dimensional spaces are not usually intuitive. Usually, this means 1-dimensional. But I'm always running into situations where there's some equation that we want to solve with a few more dimensions than 1. These situations can be rather frustrating because the problem is still, in some sense, simple, but the only numerical tools we have for solution are very general, and sporadically unreliable -- they may work most of the time, but 1 in every 20 times they fail, and I've got little idea why they fail in that particular situation, or how to fix the problem.

Root-finding is a great example of my problems. If you check out wikipedia, there is an elaborate discussion of different approaches for scalar root-finding, with various pros and cons discussed. But as soon as you go from 1 dimension to 2 dimensions, there's nothing to say any more. Sure, there are arbitrary-dimensional approaches such as Ptolemy's generic fixed-point iteration scheme, and the vector version of Newton's method. But Ptolemy's method only find's stable fixed points, and Newton's method can fail for less-obvious reasons. You would think there's something useful to say between the 1-d cases and the n-d cases.

Numerical Recipes has a helpful discussion about the difficulties of root-finding in two or more dimensions. There are some ideas out there, like multi-dimensional bisection methods, but it doesn't get us far. High-dimensional root-finding is a difficult problem. But so is minimization in an arbitrary landscape, and we tackle such problems all the time these days, with seeming success. In fact, one can make a convincing argument that root-finding in one dimension is much easier than minimization in one dimension. So, why aren't there some better ways?

In the summer of 2013, I was studying a two-dimensional nonlinear, black-box, root-finding problem, and discovered a useful numerical approach, based on a fiber bundle decomposition of the 2-dimensional space into 1-dimensional fibers where fast scalar root-finders could be employed. This idea was probably first discovered decades ago by somebody else, but their work has unfortunately not recieved enough promotion to reach my ears. So, let me give an outline of my version of the idea, and hopefully, we can get things a little more attention.

I would like to solve for the fixed point of a map represented by a system of two equations \begin{gather} x_{t+1} = f(x_t,y_t), \quad y_{t+1} = g(x_t, y_t). \end{gather} When $f$ and $g$ are both smooth, we expect our map to behavie linearly near a fixed point $(x^*, y^*)$. So, let's make some weakly non-linear vector fields to use as examples of our problem.

Now, the basic idea is that we want to somehow use all our wonderful 1-d methods to solve this 2 dimensional equation. Unfortunately, the way to do this isn't immediately clear. In minimization problems, we can take a cross-section of the potential, find the 1-d minimum, and then track that minimum as we vary the cross-section. But with root-finding, when we take a cross-section of the vector field, there are no local stationary points usually. So, we have to induce some kind of local structure on this cross-section fiber that we can then study accross fibers.

One naive classical idea is to study local displacement from the fiber. If we calculate the magnitude of the relative displacement of the vector field at each point, then \[ (x^*, y^*) \in \operatorname{argmin}_{x,y} ( f(x,y) -x )^2 + (g(x,y)-y)^2. \] Thus, we can use optimizaiton methods to solve for the root. But like I mentioned above, optimization methods are relatively in-efficient in one dimension, compared to native root-finding methods. Isn't there some better way, which uses more information from the vector field besides just the magnitudes?

Broyden's method and quasi-Newton methods can definitely do better than optimization, but they don't always converge. I'd like a method for two-dimensions with robustness like that of bracketting methods -- it might be a little slow, but you know it's going to work.

Here is a different idea that can work really well if you get lucky. Allong our cross-sectional fiber, look for places where the inner or outer geometric product with the fiber tangent-space vanishes. If $u$ is a vector in the tangent-space of our fiber, then the inner product vanishes when the vector field is perpendicular to the fiber, so \[ [ f(x,y)-x, g(x,y) - y ] \cdot u = 0.\] The outer product vanishes when the vector field is parallel to the fibure, so \[ [ f(x,y)-x, g(x,y) - y ] \wedge u = 0.\] And the only place they both vanish is at a fixed point $(x^*, y^*)$. So, given a $u$, we can use scalar root-finding to construct a curve where the inner product vanishes, and then use scalar root-finding allong that manifold to find when the outer product simultaneously vanishes. Or, we can do things in the opposite order. Since, in all cases, the functions will be smooth, we can use super-linear methods both times, thus making 2-d root finding a super-linear process.

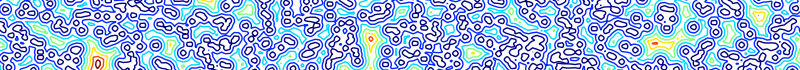

Of course, there are several ways the idea might fall down. We have to have a domain with a root already, and be tight-enough to the root that non-linear terms don't cause the scalar root-finding to fail. And what "tight-enough" actually means will depend on the orientation we've picked for our fiber bundles. We haven't even established the existence of our reduced-manifolds. I'll write more latter, but for starters, here's a picture.

The idea here can be extended to higher dimensions, although we lose a little more leverage due to the greater geometric flexibility in 3 and more dimensions. While outer-products are 1-dimensional in 2d, they are in generally $n-1$ dimensional objects in n-dimensional spaces. In 3 dimensions, we would first have to decompose the space into parallel planes, and then decompose these planes into lines.